In the final part of this series on column-level encryption, I explain how to work with encrypted columns in Amazon Redshift Serverless and decrypt specific fields on the fly using an AWS Lambda function written in Go. By combining the scalability of Redshift with the flexibility of Lambda, you can securely access sensitive data without compromising performance or security best practices.

Why Redshift and Lambda?

Amazon Redshift is an excellent choice for analytics on sensitive data because of its performance and scalability for large datasets. When working with sensitive information, such as SSNs or credit card numbers, encryption is essential for compliance and security. AWS Lambda makes it possible to decrypt this data dynamically for specific use cases, such as table joins. Writing the decryption logic in Go helps keep execution fast and efficient.

Here’s how to get started:

- Set up Redshift Serverless and load encrypted data.

- Configure AWS Lambda for decryption using a Go function.

- Query and decrypt specific columns.

Step 1: Set up Redshift Serverless and Load Encrypted Data

Configuring Redshift Serverless

Begin by creating a workgroup and a namespace in Amazon Redshift Serverless. The workgroup manages compute resources, while the namespace stores metadata and configurations.

To get started, I used the Try Redshift Serverless free trial wizard.

Creating an IAM Role for Redshift

Create an IAM role for your Redshift setup with the following permissions:

- S3 access: To load data from an S3 bucket. Ensure you select the correct bucket.

- AWS Lambda invoke permissions: To call an external function for decryption.

- KMS Decrypt: To access the KMS key used for data encryption.

For this article, I used the AWS Management Console to create the IAM role and granted it broad permissions. In a production environment, apply the principle of least privilege by removing any permissions that aren’t needed.

This IAM role will be referenced later during data loading and external function setup.

Load and Query Encrypted Data in Redshift

Ensure your encrypted dataset is stored in an Amazon S3 bucket. If you don’t have this dataset, you can generate it using the AWS Glue job from earlier posts. Encrypted columns should follow this format:

ENCRYPTED_DEK_BASE64::ENCRYPTED_VALUE

To load this data into Redshift:

-

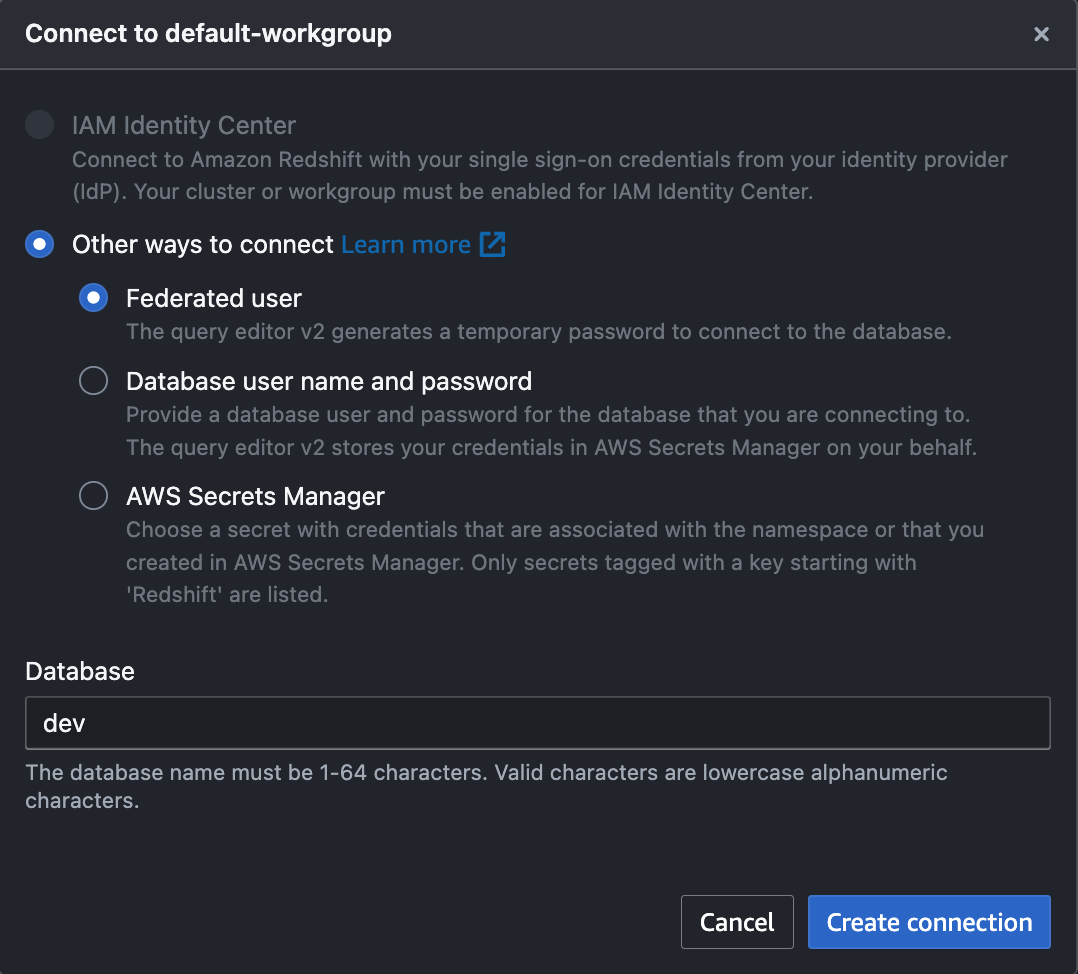

From the Redshift console, select Query editor v2 in the left pane.

-

Leave the defaults unchanged and choose Create connection.

Query editor v2 connection pop-up -

Create a Table in Redshift. The table schema should match the structure of your encrypted dataset:

-- Create TABLE

CREATE TABLE public.encrypted_data (

first_last_name character varying(256) ENCODE lzo,

ssn character varying(65535) ENCODE lzo,

credit_card_number character varying(65535) ENCODE lzo

) DISTSTYLE AUTO;

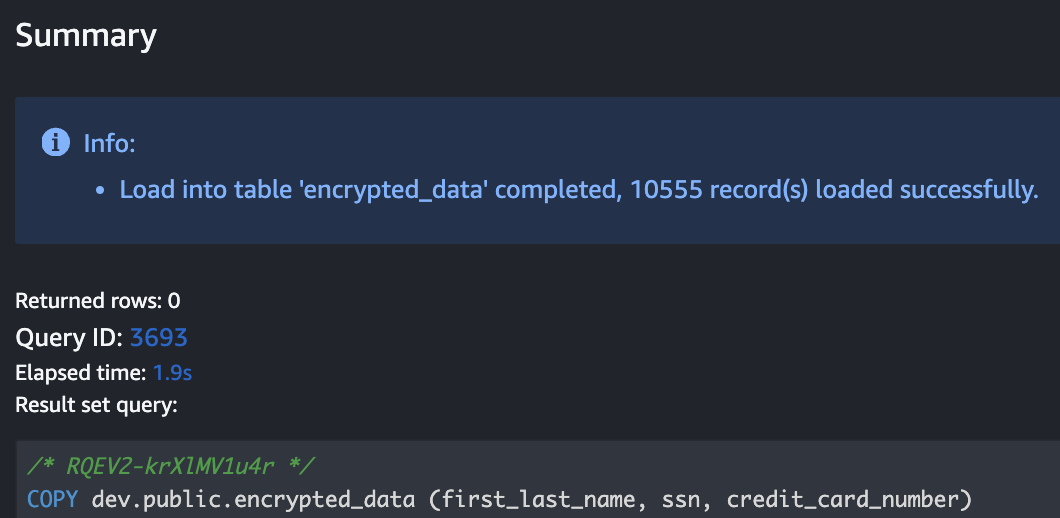

- Load Data from S3. Use the COPY command to ingest data from S3 into your Redshift table.

Note: Replace the REGION, the S3 object URI, and IAM_ROLE with the appropriate values.

-- Load data

COPY dev.public.encrypted_data (first_last_name, ssn, credit_card_number)

FROM 's3://reb7v445miifapfm-glue-spark-test/sample-pci-data.csv_encrypted.csv'

IAM_ROLE 'arn:aws:iam::111122223333:role/service-role/AmazonRedshift-CommandsAccessRole-20241223T103856'

FORMAT AS CSV DELIMITER ','

QUOTE '"'

IGNOREHEADER 1

REGION AS 'us-east-2';

After loading the data into Redshift, you should see results similar to the following.

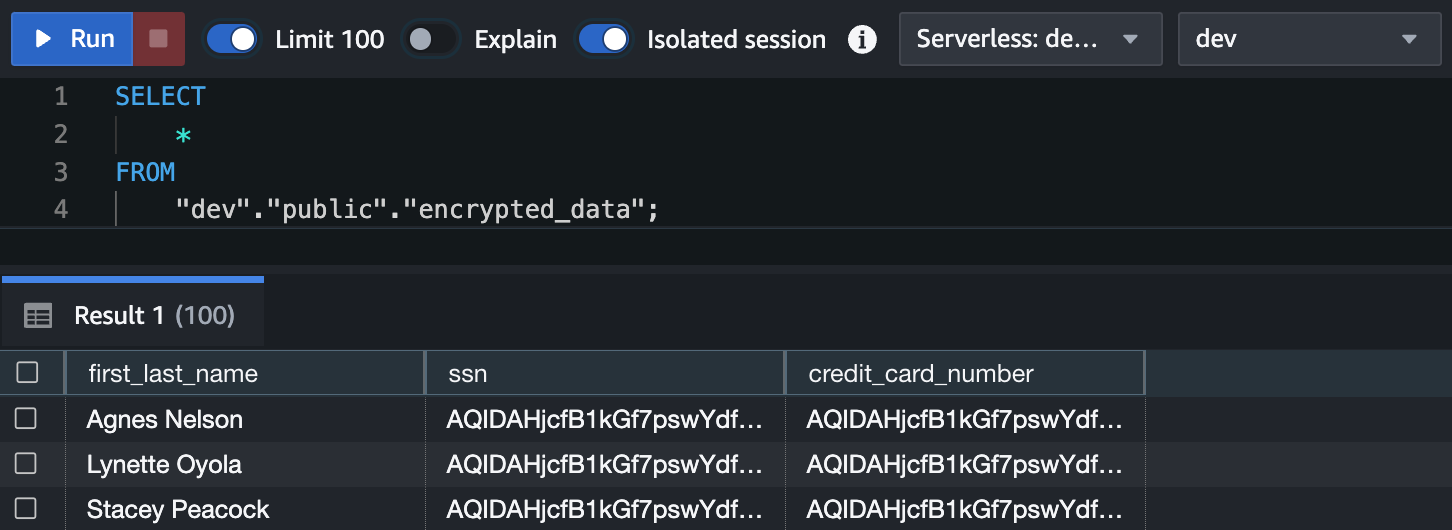

- Query the raw encrypted data to verify successful ingestion.

-- Query table

SELECT

*

FROM

"dev"."public"."encrypted_data";

The query should return rows in which the ssn and credit_card_number values are still encrypted.

Step 2: Set Up AWS Lambda for Decryption (Go Function)

AWS Lambda is an efficient way to decrypt sensitive columns dynamically. In this step, we’ll implement the decryption logic using Go, chosen for its performance and low cold-start latency.

Building the Lambda Function for Decryption

The data is encrypted client-side using AWS KMS. To decrypt it, the Lambda function will:

- Parse the encrypted value into DEK (Data Encryption Key) and ciphertext components.

- Decrypt the DEK using AWS KMS.

- Use the decrypted DEK to decrypt the ciphertext.

This function is written in Go and runs on the provided.al2023 runtime for its efficiency.
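To make the three steps concrete, here is a minimal sketch of the flow in Go. It is not the downloadable function: the KMS call is replaced by a hypothetical `unwrapFunc` so the example runs with the standard library only, and it assumes the value part is base64-encoded AES-GCM output with the nonce stored in front of the ciphertext. The article's format only guarantees the `::` separator and a base64 DEK, so adjust the cipher handling to match how your data was actually encrypted.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"encoding/base64"
	"errors"
	"fmt"
	"strings"
)

// unwrapFunc is a hypothetical stand-in for the KMS Decrypt call:
// it receives the encrypted DEK and returns the plaintext DEK.
type unwrapFunc func(encryptedDEK []byte) ([]byte, error)

// decryptField handles one ENCRYPTED_DEK_BASE64::ENCRYPTED_VALUE string.
// Assumption: the value is base64 of nonce || AES-GCM ciphertext.
func decryptField(field string, unwrap unwrapFunc) (string, error) {
	// Step 1: split the field into DEK and ciphertext components.
	dekB64, valueB64, ok := strings.Cut(field, "::")
	if !ok {
		return "", errors.New("value is not in DEK::VALUE form")
	}
	encryptedDEK, err := base64.StdEncoding.DecodeString(dekB64)
	if err != nil {
		return "", fmt.Errorf("decode DEK: %w", err)
	}
	payload, err := base64.StdEncoding.DecodeString(valueB64)
	if err != nil {
		return "", fmt.Errorf("decode value: %w", err)
	}
	// Step 2: decrypt the DEK (KMS in the real function).
	dek, err := unwrap(encryptedDEK)
	if err != nil {
		return "", fmt.Errorf("unwrap DEK: %w", err)
	}
	// Step 3: use the plaintext DEK to decrypt the ciphertext.
	gcm, err := newGCM(dek)
	if err != nil {
		return "", err
	}
	if len(payload) < gcm.NonceSize() {
		return "", errors.New("ciphertext shorter than nonce")
	}
	nonce, ciphertext := payload[:gcm.NonceSize()], payload[gcm.NonceSize():]
	plain, err := gcm.Open(nil, nonce, ciphertext, nil)
	if err != nil {
		return "", fmt.Errorf("decrypt value: %w", err)
	}
	return string(plain), nil
}

func newGCM(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// xorWrap is a toy replacement for KMS, used only by the demo.
// XOR with a constant is its own inverse, so it both wraps and unwraps.
func xorWrap(b []byte) ([]byte, error) {
	out := make([]byte, len(b))
	for i := range b {
		out[i] = b[i] ^ 0x5a
	}
	return out, nil
}

// sealForDemo builds a sample field in the layout this sketch assumes.
func sealForDemo(plain string, dek []byte) (string, error) {
	gcm, err := newGCM(dek)
	if err != nil {
		return "", err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return "", err
	}
	wrapped, _ := xorWrap(dek)
	sealed := gcm.Seal(nonce, nonce, []byte(plain), nil) // nonce || ciphertext
	enc := base64.StdEncoding
	return enc.EncodeToString(wrapped) + "::" + enc.EncodeToString(sealed), nil
}

func main() {
	dek := []byte("0123456789abcdef0123456789abcdef") // 32 bytes for AES-256
	field, err := sealForDemo("123-45-6789", dek)
	if err != nil {
		fmt.Println("seal failed:", err)
		return
	}
	plain, err := decryptField(field, xorWrap)
	fmt.Println(plain, err)
}
```

In the real function, `unwrap` would wrap a KMS Decrypt request made with the AWS SDK for Go, which is why the execution role needs kms:Decrypt on the key.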

Lambda Function Configuration

- Runtime: provided.al2023

- Memory: 1024 MB

- Timeout: 15 minutes

- Architecture: arm64

- Execution role: Attach an IAM role with kms:Decrypt permissions for the KMS key used during encryption.

You can download the Lambda function source code here.

Note: The code might need further optimization to reduce the number of KMS API calls.
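One possible way to cut down on KMS calls is to cache plaintext DEKs inside a warm Lambda instance, keyed by their encrypted form. The sketch below is an illustration, not part of the downloadable code: `dekCache` and `unwrapFunc` are hypothetical names, and the approach only helps if many rows share the same encrypted DEK, which you should verify against your own dataset. Keeping plaintext keys in memory is also a security trade-off to weigh.

```go
package main

import (
	"fmt"
	"sync"
)

// unwrapFunc is a hypothetical stand-in for a KMS Decrypt call.
type unwrapFunc func(encryptedDEK string) ([]byte, error)

// dekCache memoizes plaintext DEKs by their encrypted (base64) form, so
// repeated lookups of the same DEK trigger a single unwrap call.
type dekCache struct {
	mu     sync.Mutex
	keys   map[string][]byte
	unwrap unwrapFunc
}

func newDEKCache(unwrap unwrapFunc) *dekCache {
	return &dekCache{keys: make(map[string][]byte), unwrap: unwrap}
}

// get returns the cached plaintext DEK or unwraps and stores it.
// The lock is held during unwrap, which keeps the sketch simple but
// serializes concurrent unwrap calls.
func (c *dekCache) get(encryptedDEK string) ([]byte, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if dek, ok := c.keys[encryptedDEK]; ok {
		return dek, nil
	}
	dek, err := c.unwrap(encryptedDEK)
	if err != nil {
		return nil, err // errors are not cached, so a later call can retry
	}
	c.keys[encryptedDEK] = dek
	return dek, nil
}

func main() {
	calls := 0
	cache := newDEKCache(func(enc string) ([]byte, error) {
		calls++ // counts how often the fake "KMS" is hit
		return []byte("plaintext-key-for-" + enc), nil
	})
	for _, enc := range []string{"dekA", "dekA", "dekB", "dekA"} {
		if _, err := cache.get(enc); err != nil {
			fmt.Println("unwrap failed:", err)
			return
		}
	}
	fmt.Println("unwrap calls:", calls)
}
```

Declaring the cache as a package-level variable would let it survive across invocations of the same warm instance. A production version would probably also bound the cache size and expire entries.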

Deploy the Lambda Function

Compile and deploy the Lambda function code. I used the S3 bucket lambda-code-<your-account-id>-<unique-id> to host the function’s code; you can do the same if you wish.

To deploy the Lambda function:

- Compile the Go code for Lambda.

# Build the Go executable for Lambda

go mod tidy

GOOS=linux GOARCH=arm64 go build -tags lambda.norpc -o bootstrap main.go

# Zip the executable

zip main.zip bootstrap

- Upload the zip file to an S3 bucket.

aws s3 cp main.zip s3://<S3_BUCKET_NAME_TO_REPLACE>

- Update the Lambda function code.

aws lambda update-function-code \

--region <REGION_TO_REPLACE> \

--function-name redshift-decrypt \

--s3-bucket <S3_BUCKET_NAME_TO_REPLACE> \

--s3-key main.zip

After deploying, you should see output similar to this.

{

"FunctionName": "redshift-decrypt",

"FunctionArn": "arn:aws:lambda:us-east-2:111122223333:function:redshift-decrypt",

"Runtime": "provided.al2023",

"Role": "arn:aws:iam::111122223333:role/service-role/redshift-decrypt-role-le5rtnrf",

"Handler": "hello.handler",

"CodeSize": 5868679,

"Description": "",

"Timeout": 900,

"MemorySize": 1024,

"LastModified": "2024-12-25T15:45:10.000+0000",

"CodeSha256": "e9N44L0yBqPK3m1mnidBOH5c7OKzjHxqrAxXnEWezw0=",

"Version": "$LATEST",

"TracingConfig": {

"Mode": "PassThrough"

},

"RevisionId": "c001d247-1b52-45ce-b88a-347b69c0774e",

"State": "Active",

"LastUpdateStatus": "InProgress",

"LastUpdateStatusReason": "The function is being created.",

"LastUpdateStatusReasonCode": "Creating",

"PackageType": "Zip",

"Architectures": [

"arm64"

],

"EphemeralStorage": {

"Size": 512

},

"SnapStart": {

"ApplyOn": "None",

"OptimizationStatus": "Off"

},

"RuntimeVersionConfig": {

"RuntimeVersionArn": "arn:aws:lambda:us-east-2::runtime:67a272559b009fdf061da2b34842665aff0c25b2842a83c73d494cd85a1fac21"

},

"LoggingConfig": {

"LogFormat": "Text",

"LogGroup": "/aws/lambda/redshift-decrypt"

}

}

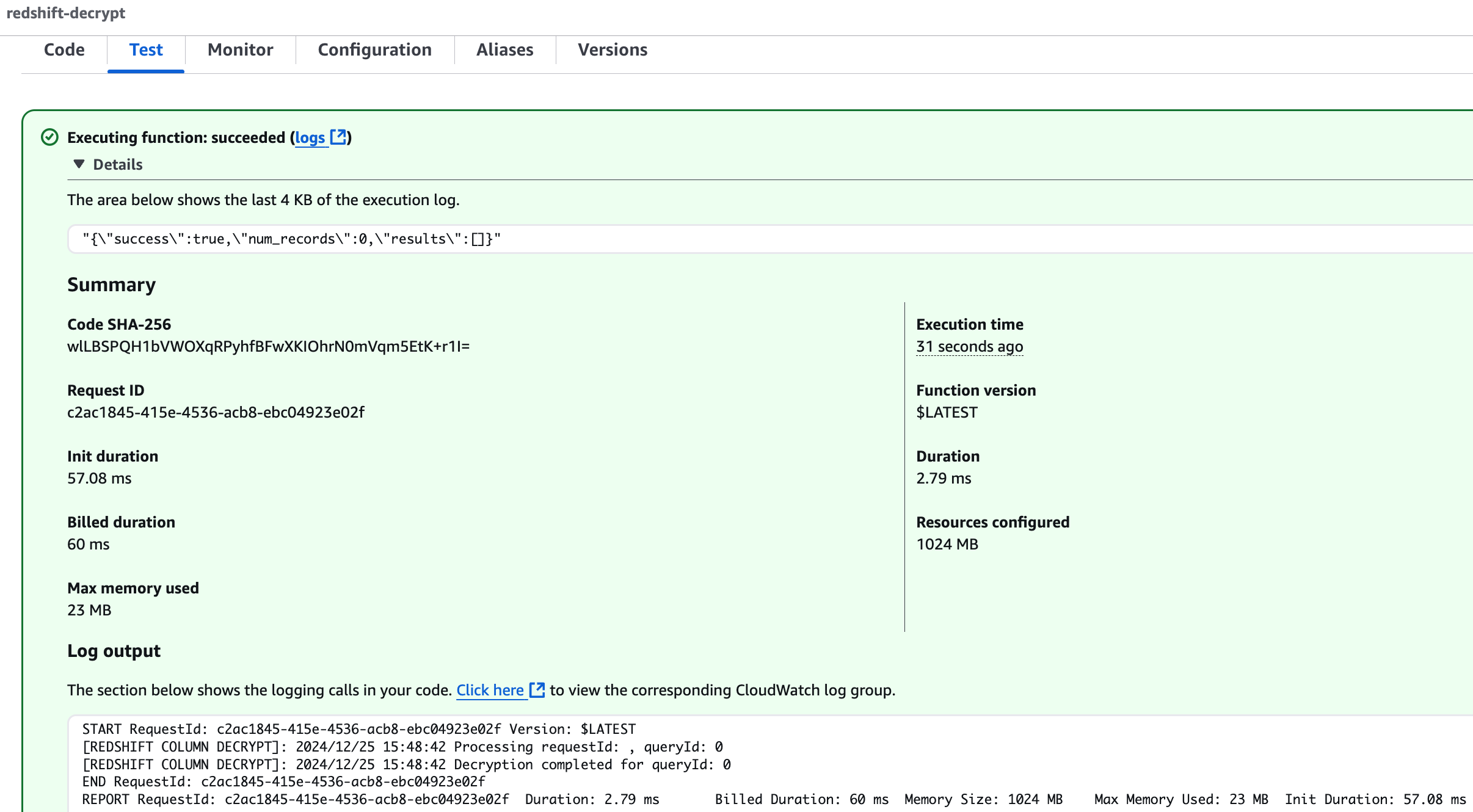

You can test the function from the Lambda console to ensure it works as expected.

Step 3: Query and Decrypt Specific Columns

With the Lambda function set up, you can integrate it with Redshift using a Lambda user-defined function (UDF). A Lambda UDF lets you call the Lambda function for decryption directly within SQL queries.

Create a Redshift UDF

Define the UDF in Redshift to invoke the Lambda function. Use CREATE EXTERNAL FUNCTION to link the decryption function with the IAM role you created earlier. Update the IAM role and Lambda function ARN to match your setup.

CREATE OR REPLACE EXTERNAL FUNCTION decrypt_column(encrypted_value TEXT)

RETURNS TEXT

STABLE

IAM_ROLE 'arn:aws:iam::111122223333:role/service-role/AmazonRedshift-CommandsAccessRole-20241223T103856'

LAMBDA 'arn:aws:lambda:us-east-2:111122223333:function:redshift-decrypt';

Query with Decryption

Run a query to decrypt sensitive columns in real time.

-- Query encrypted columns

SELECT

first_last_name,

decrypt_column(ssn) AS decrypted_ssn,

decrypt_column(credit_card_number) AS decrypted_credit_card_number

FROM "dev"."public"."encrypted_data";

The query dynamically decrypts the specified columns, leaving others untouched. This ensures sensitive data is decrypted only when needed.

You should see results similar to the following.

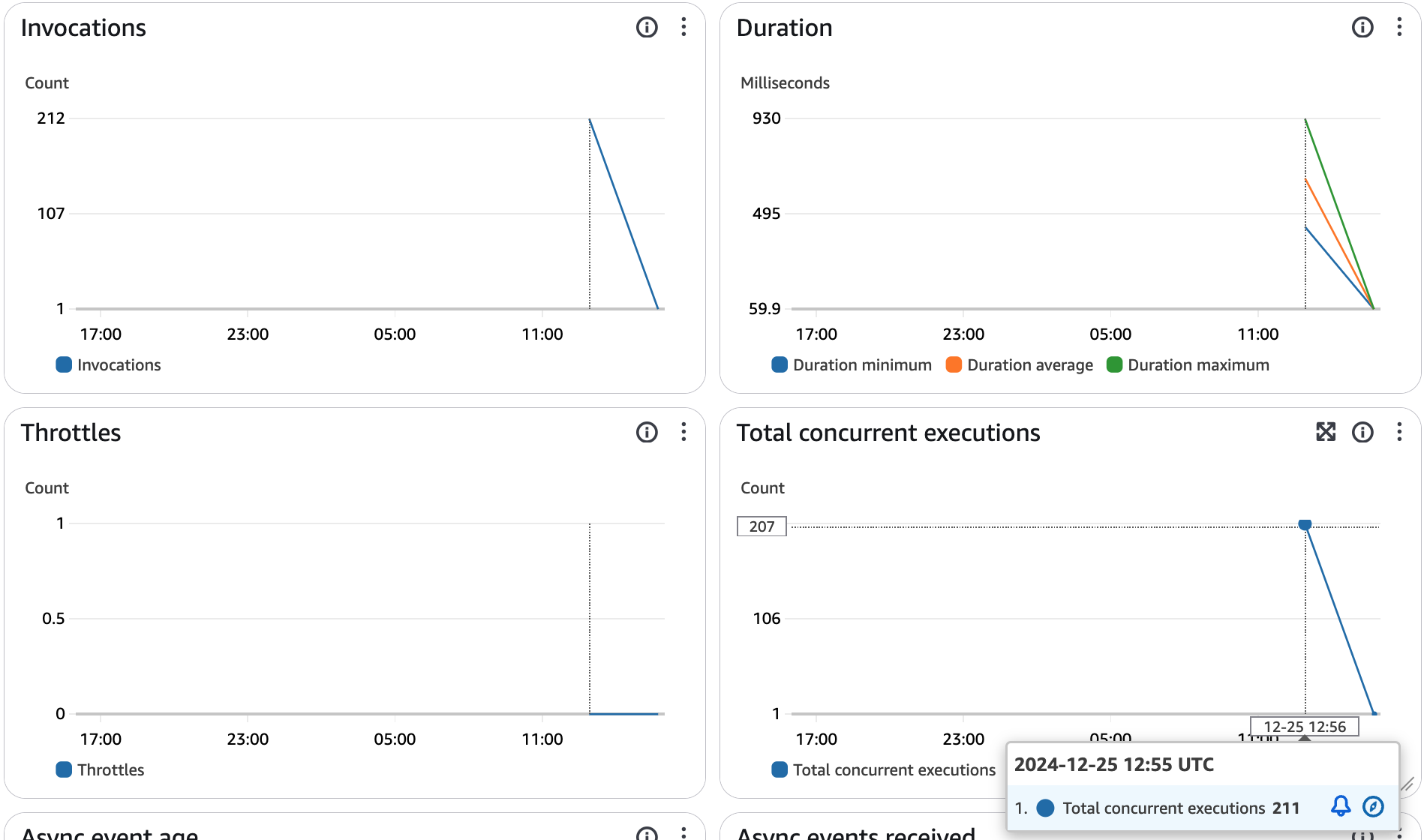

Monitor Lambda Invocations

Check the Lambda function metrics and you will see that the function is invoked multiple times for a single query. This behavior is expected: Redshift batches rows and sends at most 5 MB of payload per function invocation.
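Because each invocation carries a batch of rows, the handler has to return one result per input row. The sketch below shows how such a batch could be processed in Go. The field names (`arguments`, `num_records`, `success`, `error_msg`, `results`) follow my reading of the Redshift Lambda UDF JSON interface, so check them against the current documentation. `handleBatch` and the uppercase placeholder used for decryption are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// udfRequest holds the parts of the Redshift payload this sketch uses.
// Each entry in Arguments is one row; a nil pointer is a SQL NULL.
type udfRequest struct {
	NumRecords int         `json:"num_records"`
	Arguments  [][]*string `json:"arguments"`
}

// udfResponse is the JSON shape returned to Redshift.
type udfResponse struct {
	Success    bool      `json:"success"`
	ErrorMsg   string    `json:"error_msg,omitempty"`
	NumRecords int       `json:"num_records"`
	Results    []*string `json:"results"`
}

// handleBatch applies decrypt to the first argument of every row and
// keeps the results in the same order as the input rows.
func handleBatch(req udfRequest, decrypt func(string) (string, error)) udfResponse {
	results := make([]*string, 0, len(req.Arguments))
	for i, row := range req.Arguments {
		if len(row) == 0 || row[0] == nil {
			results = append(results, nil) // NULL in, NULL out
			continue
		}
		plain, err := decrypt(*row[0])
		if err != nil {
			// Fail the whole batch so the query surfaces the error.
			return udfResponse{Success: false, ErrorMsg: fmt.Sprintf("row %d: %v", i, err)}
		}
		results = append(results, &plain)
	}
	return udfResponse{Success: true, NumRecords: len(results), Results: results}
}

func main() {
	// A trimmed-down sample payload; real requests carry more metadata.
	payload := `{"num_records": 2, "arguments": [["dek1::abc"], [null]]}`
	var req udfRequest
	if err := json.Unmarshal([]byte(payload), &req); err != nil {
		fmt.Println("bad payload:", err)
		return
	}
	// Placeholder "decryption" so the example runs without KMS.
	resp := handleBatch(req, func(s string) (string, error) {
		return strings.ToUpper(s), nil
	})
	out, _ := json.Marshal(resp)
	fmt.Println(string(out))
}
```

In a deployed function, a handler with this shape would be registered through `lambda.Start` from the `github.com/aws/aws-lambda-go/lambda` package instead of being called from `main` directly.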

Conclusion

Combining the analytics power of Amazon Redshift with the flexibility of AWS Lambda allows you to securely and efficiently handle encrypted data. This approach ensures compliance and security without sacrificing performance, even for large-scale analytics.

In this series, we covered how to implement column-level encryption during data ingestion, process data securely, and dynamically decrypt it for analytics. These steps provide a solid foundation for enhancing data security in your workflows.

If you have any questions about this series or want to share how you’re implementing encryption in your projects, feel free to reach out.

Stay secure!